Copyright © Per Jessen, 2003-2006. per@computer.org Unless otherwise indicated, verbatim copying and distribution of this entire article is permitted in any medium, provided this notice is preserved.

The Pleiades cluster - round 2.

Pleiades I was a small 4-node cluster I built around 1998 while we were still living in England. This article is more concerned with the building of Pleiades II, but also provides some historical/background information on Pleiades I.

Summary

The Pleiades II cluster was built using standard techniques and information found on the Internet. There is plenty of material available on how to build a Beowulf-style computing cluster. This article adds little new information overall, and is mainly intended to serve as an account of an actual computing cluster project.

Note: for the time being - July 2003 - this is work in progress; check back frequently for updates.

Pleiades I

Pleiades was named after the Pleiades star cluster, a famous open star cluster in the constellation Taurus, catalogued as M45. (Of course, this is hardly the only computing cluster named Pleiades; see other Pleiades clusters.)

Pleiades I was meant entirely as an educational exercise in building a cluster and served little other purpose, although it was also very helpful in the early usability tests of the J1-card (see ENIDAN Technologies). The physical frame was rather crude, being made of wood and plastic, but built for minimal size. Pleiades I ran for about 4 years and even survived the relocation of the ENIDAN facilities from London to Zürich, but it started showing its age towards the end of 2002, when 3 or 4 harddisks started failing. Each node had 2 x 270MB Quantum Maverick harddrives set up as a RAID-0 array. I had bought a full crate (20 pcs) of these drives on eBay, and given their age a slow degradation was hardly unexpected.

However, I was busy elsewhere at the time and decided to switch off the cluster for the time being, intending to replace the drives later. "Later" turned out to be the beginning of June 2003.

Pleiades II

When I built Pleiades I, it was actually intended to be an 8-node cluster. The additional 4 boards, CPUs and memory were all ready, but after I had completed the first 4 nodes, these turned out to be sufficient for the initial purpose.

Pleiades II was built partly under a contract with ENIDAN Technologies Ltd., and partly, once again, as a purely educational exercise. This time it was designed from scratch as an 8-node cluster.

Node configuration

Each of the 8 Pleiades II nodes consists of the following:

- Mainboard: P6NDI Dual Pentium Pro by AIR.

- Memory: 192MB EDO RAM (2x64MB + 2x32MB)

- Network: 3COM 3C509B

- Harddisk: IDE-drive Quantum AC21000H, 1083MB
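For a quick feel of what the whole cluster adds up to, the per-node figures in the list above can simply be multiplied out. The following is only a small sketch of that arithmetic; the constant and function names are made up for illustration.

```python
# Per-node figures taken from the list above; the totals are plain arithmetic.
NODES = 8
CPUS_PER_NODE = 2             # dual Pentium Pro mainboard
SIMMS_MB = [64, 64, 32, 32]   # 2x64 + 2x32 EDO RAM SIMMs
DISK_MB = 1083                # one IDE drive per node


def cluster_totals(nodes=NODES):
    """Return aggregate CPU, memory and disk figures for the cluster."""
    ram_per_node = sum(SIMMS_MB)
    return {
        "cpus": nodes * CPUS_PER_NODE,
        "ram_per_node_mb": ram_per_node,
        "ram_total_mb": nodes * ram_per_node,
        "disk_total_mb": nodes * DISK_MB,
    }


if __name__ == "__main__":
    for key, value in cluster_totals().items():
        print(f"{key}: {value}")
```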

The 8 mainboards were bought sometime in 1998, just about the time when AIR (Advanced Integration Research) was closing down. I believe I got them fairly cheap, although I wasn't aware at the time that AIR was about to close. The 16 x 200MHz PentiumPros were all bought second-hand, around the same time, at the Computer Exchange on Tottenham Court Road in London. The 16 x 64MB EDO RAM SIMMs were bought in bulk from a company in Germany, as were the 16 PentiumPro heatsinks & fans (I think I bought a crate of 32). The rest, that is the additional 16 x 32MB EDO RAM SIMMs, the 8 Quantum 1GB drives and a stack of 20 3C509 cards, were all bought on eBay, probably sometime in early 2002.

You will notice that the overall specs hardly make for a high-end computing cluster. They do however make quite well for a ...

Master node

Power supply

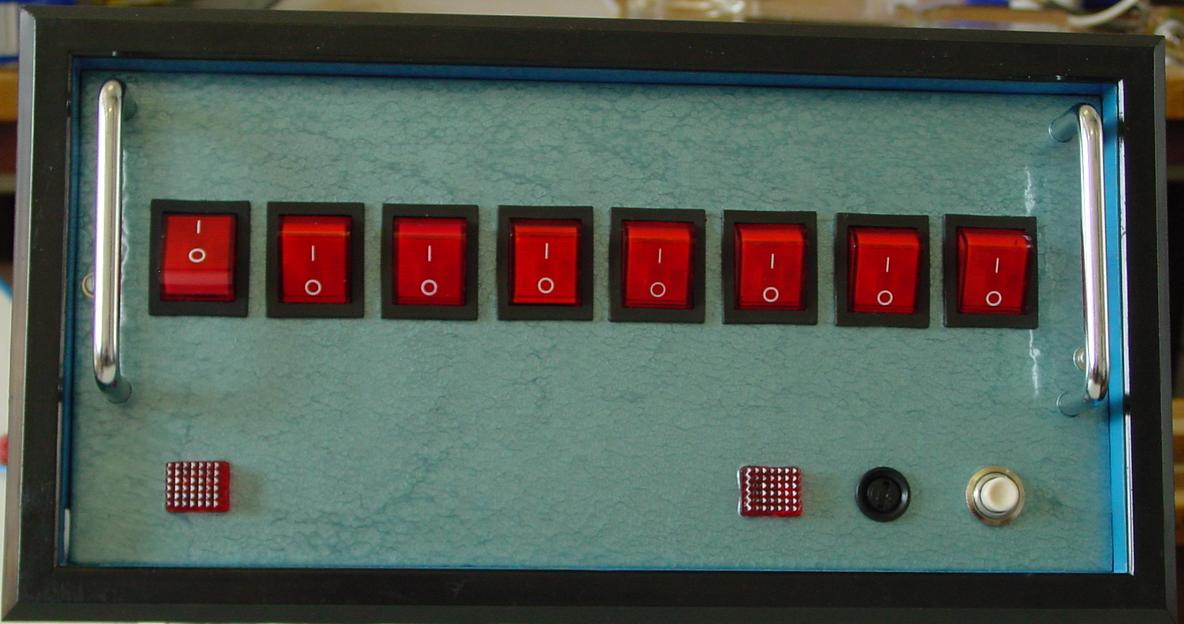

Pleiades I had 4 AT power-supplies, all powered through a single on/off switch. This arrangement worked fine until maintenance was needed. For Pleiades II it was clear I needed individual power control for each node.

I decided to reuse some old components I had had for years, in fact mostly parts from the power-supply of a retired DEC PDP11-04, plus a few other things. An elaborate power-sequencing scheme was ruled out as 1) we have plenty of electrical power available, and 2) 8 nodes running at e.g. 200W each won't be all that stressing, even if they were all to be turned on at the same time. (Still to be written: how to do it the right way. The power supply is a chapter by itself.)
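As a rough sanity check of the "8 nodes at 200W" reasoning, the steady-state load can be worked out in a few lines. This is only a sketch: the 230V mains voltage is my assumption (nominal European mains), the names are made up for illustration, and switch-on inrush current is deliberately ignored.

```python
NODES = 8
WATTS_PER_NODE = 200   # rough per-node figure from the text
MAINS_VOLTS = 230      # ASSUMPTION: nominal European mains voltage


def total_load(nodes=NODES, watts=WATTS_PER_NODE, volts=MAINS_VOLTS):
    """Return (total watts, current in amps) for all nodes running at once."""
    total_watts = nodes * watts
    amps = total_watts / volts
    return total_watts, amps


if __name__ == "__main__":
    watts, amps = total_load()
    print(f"{watts} W total, about {amps:.1f} A at {MAINS_VOLTS} V")
```

Under these assumptions the whole cluster draws roughly as much as a single electric kettle, which fits the decision not to bother with power sequencing.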

Networking

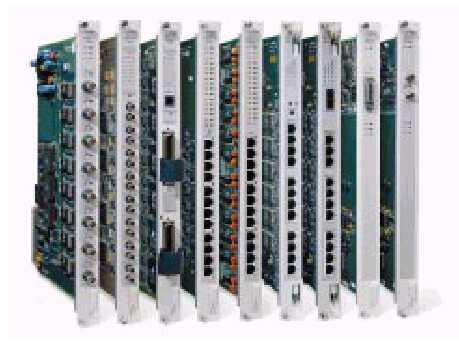

The first network switch, a 48-port Synoptics LattisNet 3000 Concentrator, was purchased cheaply from a company I worked for at the time. Unfortunately the chassis is quite bulky (see picture) and the overall assembly is also quite noisy. I quickly opted to replace it with a 3COM Link Switch 1000, also bought on eBay. This has 24 x 10Mbps ports plus 1 x 100Mbps uplink.

Note: the Synoptics LattisNet switch is for sale. See the complete specs.

Node installation

Pleiades II boots and installs off the network, primarily based on Etherboot, initrd and rsync. I am not using NIC bootroms, but boot the Etherboot-images from floppy-disk instead. It came down to a simple cost-comparison: 8 new floppy-drives @ CHF16 plus 8 floppy-disks versus 8 EPROMs plus an EPROM-burner (as I don't have one). The floppy-drives + floppies won. For a larger cluster I suspect buying and programming bootroms would be more advantageous, especially when maintenance costs are taken into account.
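To give an idea of what the rsync part of such a network install might look like, here is a minimal sketch that builds the rsync command a freshly booted node could run to pull its root filesystem from the master. It is not the actual Pleiades setup: the host name `master`, the rsync daemon module `nodeimage` and the mount point `/mnt/root` are all hypothetical.

```python
import shlex


def rsync_install_command(master="master", module="nodeimage", target="/mnt/root"):
    """Build an rsync command that pulls a node image from the master.

    All three defaults are hypothetical placeholders, not the real setup.
    """
    # "host::module/" addresses a module exported by an rsync daemon.
    source = f"{master}::{module}/"
    # Normalise the destination so it always ends in exactly one slash.
    destination = target.rstrip("/") + "/"
    # -a preserves permissions, ownership and times; --numeric-ids avoids
    # uid/gid name mapping; --delete removes files not present in the image.
    return ["rsync", "-a", "--numeric-ids", "--delete", source, destination]


if __name__ == "__main__":
    print(shlex.join(rsync_install_command()))
```

The appeal of this kind of approach is that re-running the same command later brings a node back in line with the master image, transferring only what has changed.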

Etherboot and 720-K floppy-disks

For the initial testing I used regular 3.5" 1.44MB floppy-disks. Nothing unusual about that. However, for the cluster itself, I dug out a (somewhat ancient) pack of 3.5" 720K floppy-disks, i.e. double-density rather than high-density. I doubt you could buy these in a high-street store any longer, but they were perfectly adequate for my purposes.

Except that Etherboot in the standard version (e.g. from www.rom-o-matic.com) doesn't work on double-density 3.5" floppy-disks, at least not on the ones I had. I went through several rounds of testing floppy-disks in various floppy-drives, but could not get a 3.5" 720K floppy-disk to boot. I always ended up with "Rd err". As www.rom-o-matic.com does not appear to provide images for 720K floppy-disks, you'll have to build the necessary boot-image from source to overcome this minor hurdle. In the Pleiades case:

- edit the Makefile to change the DISKLOADER setting from "bin/boot1a.bin" to "bin/floppyload.bin".

- make bin32/3c509.lzdsk
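The Makefile edit in the first step can also be scripted, which is handy if you rebuild from a fresh source tree now and then. The sketch below only rewrites the text of the Makefile; it assumes the setting appears as a plain `DISKLOADER=...` style assignment on one line, which may not match every Etherboot release, and the function name is made up for illustration.

```python
import re

OLD_LOADER = "bin/boot1a.bin"
NEW_LOADER = "bin/floppyload.bin"

# ASSUMPTION: the setting looks like "DISKLOADER = bin/boot1a.bin" on one line.
_DISKLOADER = re.compile(
    r"^([ \t]*DISKLOADER[ \t]*[:+?]?=[ \t]*)" + re.escape(OLD_LOADER),
    re.MULTILINE,
)


def switch_diskloader(makefile_text):
    """Return the Makefile text with DISKLOADER pointing at floppyload.bin."""
    # Only lines that assign DISKLOADER are touched; other mentions of
    # boot1a.bin are left alone.
    return _DISKLOADER.sub(lambda m: m.group(1) + NEW_LOADER, makefile_text)


if __name__ == "__main__":
    sample = "DISKLOADER=bin/boot1a.bin\n"
    print(switch_diskloader(sample), end="")
```

After writing the result back to the Makefile, the `make bin32/3c509.lzdsk` step is run as before.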

The problem regarding non-1.44MB floppy-disks is mentioned in the Etherboot FAQ (which is where I got the hint from), but it is not completely clear from the FAQ what you need to do.
